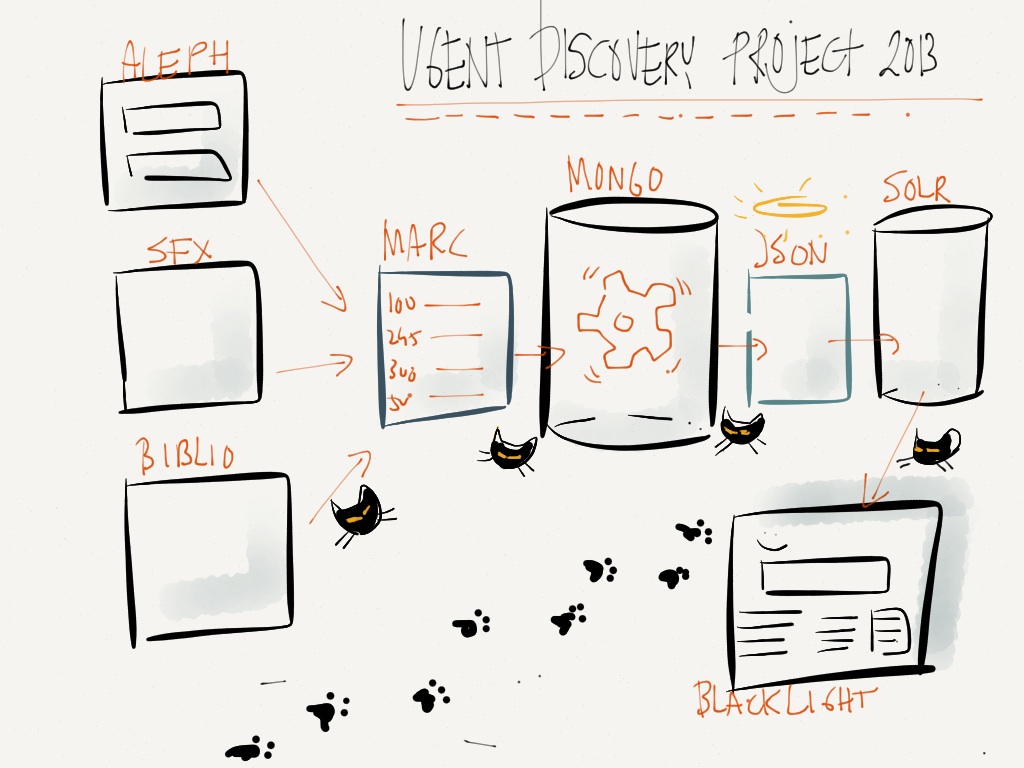

At Ghent University Library we are currently using Catmandu in a project to create a new discovery interface for our Aleph catalog. Every day we export MARC sequential files from several Aleph catalogs and load them into a MongoDB store. We also add records from our SFX server and our institutional repository, Biblio, to this store.

We use the MongoDB store to clean our datasets and to perform a FRBR-ized merge of records. This merge is logical in our setup: one collection contains the MARC records, and a second collection holds the relations between these records. Once the data is cleaned and merged, we export it to a Solr indexer, which is used by the BlackLight frontend.
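To make the idea of a logical merge more concrete, here is a minimal sketch in Python. It is not our production code and does not use Catmandu or MongoDB: plain dictionaries stand in for the two collections, the sample records are made up, and the `work_key` grouping rule (normalised author plus title) is a hypothetical simplification of what a FRBR-ized merge would really compare.

```python
# Stand-in for the collection of records (a plain dict, not a real MongoDB collection).
# The records below are made-up sample data.
records = {
    "aleph:001": {"source": "aleph", "title": "Hamlet", "author": "Shakespeare, William"},
    "aleph:002": {"source": "aleph", "title": "Hamlet ", "author": "shakespeare, william"},
    "biblio:9": {"source": "biblio", "title": "Catmandu in practice", "author": "Doe, Jane"},
}


def work_key(record):
    """Hypothetical grouping key: normalised author plus normalised title."""
    return (record["author"].strip().lower(), record["title"].strip().lower())


def build_relations(records):
    """Group record ids by work key; the records themselves are left untouched."""
    relations = {}
    for record_id, record in records.items():
        relations.setdefault(work_key(record), []).append(record_id)
    return relations


# Stand-in for the second collection: it only stores which records belong together.
relations = build_relations(records)
```

Because the relations live in their own structure, a grouping rule can be changed and the relations rebuilt without re-importing or rewriting the underlying records.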

The image below shows the architecture. The Catmandu trail is clearly visible. To import MARC records into MongoDB we use Catmandu importers. Once all the data is in the store, we run a bunch of Catmandu fixers to clean up the data. At the end of the day we use Catmandu exporters to send the data as JSON files to Solr, where we index the data and make it available in BlackLight.
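The import, fix, export trail can be sketched as a chain of small steps. The Python below only illustrates that pattern; it is not the Catmandu API (Catmandu itself is written in Perl). The tab-separated input format, the two fix functions and all function names are assumptions made for the example, and a string buffer stands in for the JSON files sent to Solr.

```python
import io
import json


def import_records(lines):
    """Importer stand-in: turn hypothetical 'id<TAB>title' lines into record dicts."""
    for line in lines:
        record_id, title = line.rstrip("\n").split("\t", 1)
        yield {"_id": record_id, "title": title}


def trim_title(record):
    """Fix stand-in: strip surrounding whitespace from the title."""
    record["title"] = record["title"].strip()
    return record


def add_source(record):
    """Fix stand-in: tag the record with the catalog it came from."""
    record["source"] = "aleph"
    return record


def apply_fixes(records, fixes):
    """Run every fix, in order, on every record."""
    for record in records:
        for fix in fixes:
            record = fix(record)
        yield record


def export_json(records, out):
    """Exporter stand-in: write one JSON document per line, return the record count."""
    count = 0
    for record in records:
        out.write(json.dumps(record, sort_keys=True) + "\n")
        count += 1
    return count


raw = ["001\t  Hamlet \n", "002\tMacbeth\n"]  # made-up sample input
buffer = io.StringIO()
exported = export_json(apply_fixes(import_records(raw), [trim_title, add_source]), buffer)
```

Each stage only consumes and yields records, so importers, fixes and exporters can be swapped independently, which is the property that makes the trail in the diagram easy to follow.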